Using vLLM and Open WebUI in OOD

This guide explains how to launch and access vLLM + Open WebUI through the Open OnDemand (OOD) interface. Follow it carefully: some steps are not obvious, in particular knowing when the backend is ready to use.

Launching the Session

-

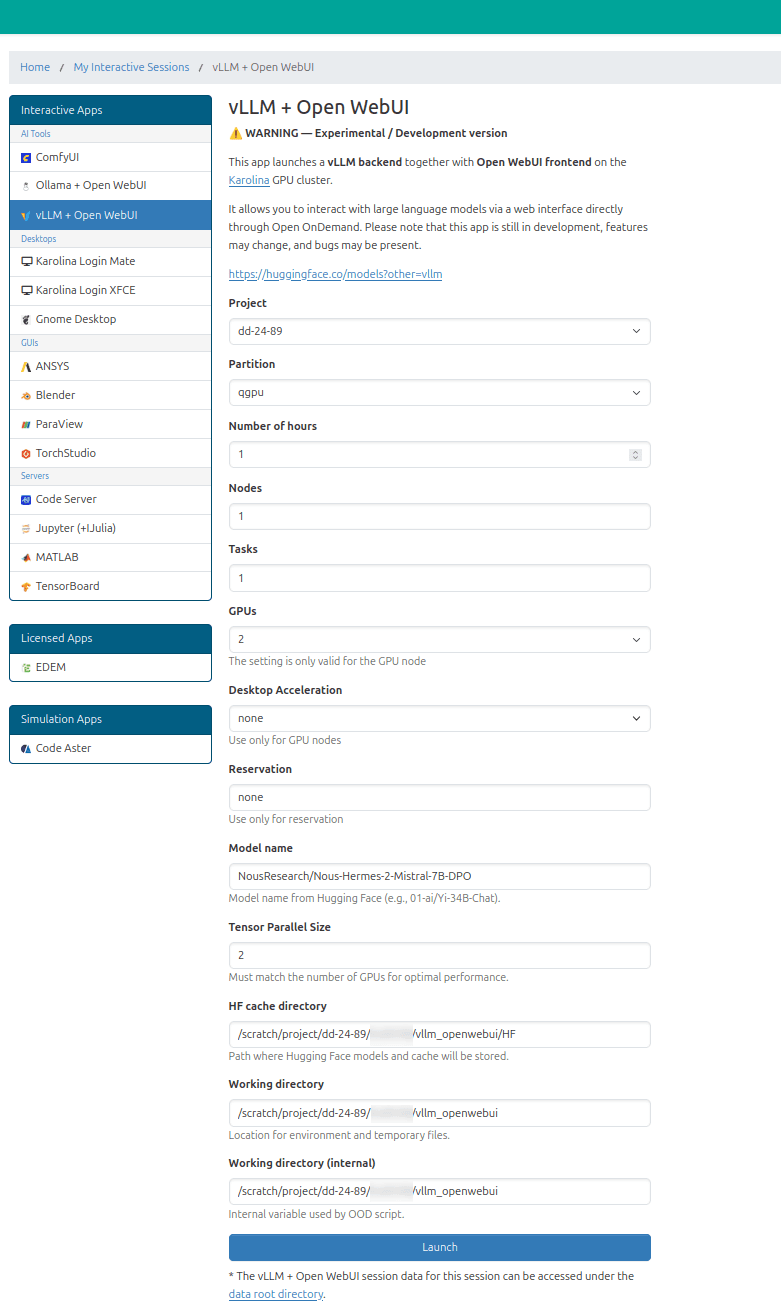

In OOD, start a new session for vLLM + Open WebUI.

- Enter the required parameters, including the number of GPUs and the model name, and choose the cache and working directories.

-

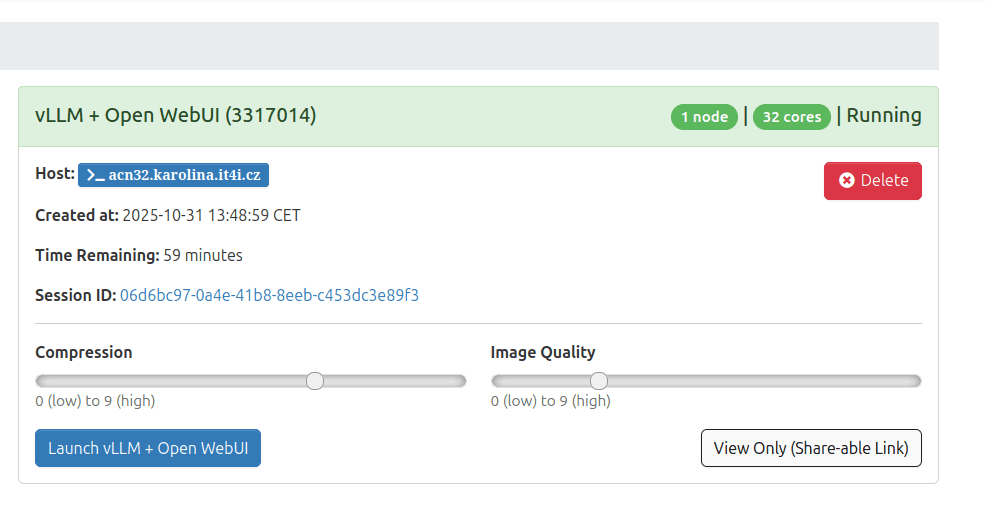

Wait for the session to enter the Running state:

- Once it is running, do not immediately click “Launch vLLM + Open WebUI”:

  - The backend application may still be initializing or downloading required modules.
  - If you launch it too early, the application might crash.

How long you need to wait depends on whether:

- you have launched this application before, and
- the required AI modules and model files have already been downloaded.
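You can check in advance whether a model is already cached. This only works if the model name uses the Hugging Face `org/name` form and the cache directory you chose is laid out as a Hugging Face hub cache. The sketch below assumes the hub's `models--<org>--<name>` directory naming, which this OOD app may not use. `model_is_cached` is a hypothetical helper, not part of the app.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report whether a Hugging Face model appears in a cache
# directory. ASSUMPTION: hub cache naming models--<org>--<name>, located either
# directly in CACHE_DIR or under CACHE_DIR/hub.
model_is_cached() {
  local cache_dir="$1" model="$2" entry d
  # Convert "org/name" into "models--org--name".
  entry="models--$(printf '%s' "$model" | sed 's|/|--|g')"
  for d in "$cache_dir/$entry" "$cache_dir/hub/$entry"; do
    # A hub cache entry normally contains a snapshots/ subdirectory.
    # Its presence does not prove that the download finished.
    if [ -d "$d/snapshots" ]; then
      return 0
    fi
  done
  return 1
}
```

For example, `model_is_cached /path/to/cache org/model-name && echo "already cached"`. If the model is cached, expect a shorter wait. Otherwise the first launch must download the model.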

Monitoring the Log

Since it is not always obvious when the backend is ready, it’s helpful to monitor the log output directly.

-

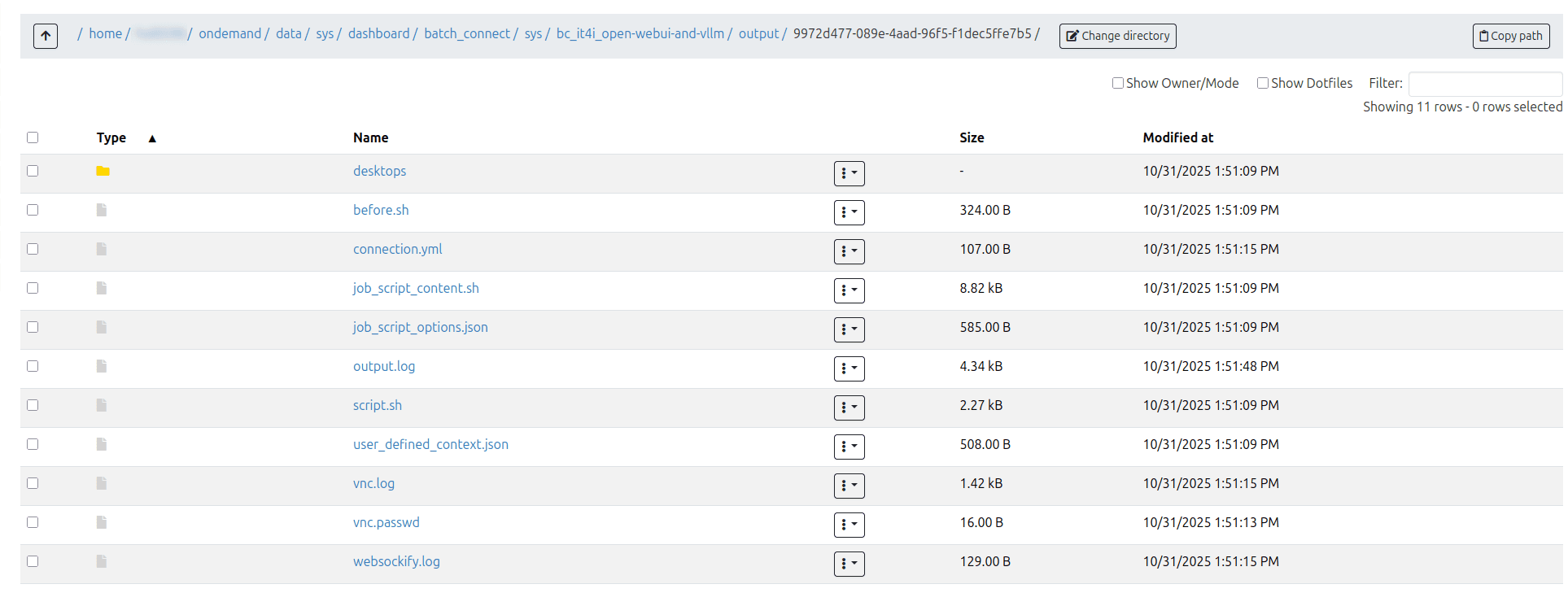

In your session overview in OOD, click on the Session ID (a long alphanumeric string). You’ll be redirected to a file browser view:

- Locate the file named `output.log`.

- Copy its full path (displayed at the top).

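- Open a terminal and run the following command, replacing `/path/to/output.log` with the path you copied:

  `tail -f /path/to/output.log`

- Wait until you see the log message `INFO: Application startup complete.` Downloading and loading the model can take several minutes. Once the message appears, press Ctrl+C to stop following the log.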

At this point, the backend server (vLLM + Open WebUI) has finished initializing.
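Instead of watching the `tail -f` output yourself, you can script the wait. The sketch below assumes Open OnDemand's default per-user data location for interactive sessions (`~/ondemand/data/sys/dashboard/batch_connect/`). In that layout, each session's files sit in a directory named after its Session ID. Your site may configure this differently. In that case, set `OOD_BC_ROOT` or pass the path you copied from the file browser directly. `find_session_log`, `wait_for_startup` and `OOD_BC_ROOT` are hypothetical names. The poll interval and timeout defaults are arbitrary choices.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for waiting on the vLLM + Open WebUI backend.

# ASSUMPTION: default OOD data root; override OOD_BC_ROOT if your site differs.
OOD_BC_ROOT="${OOD_BC_ROOT:-$HOME/ondemand/data/sys/dashboard/batch_connect}"

# Print the path of output.log for a given Session ID (first match only).
find_session_log() {
  local session_id="$1"
  find "$OOD_BC_ROOT" -type f -path "*/$session_id/output.log" 2>/dev/null | head -n 1
}

# Block until the startup message appears in the log.
# Args: log path, timeout in seconds (default 1800), poll interval (default 10).
# Returns 0 once found, 1 on timeout, 2 if no log path was given.
wait_for_startup() {
  local log="$1" timeout="${2:-1800}" interval="${3:-10}" elapsed=0
  [ -n "$log" ] || { echo "No log path given" >&2; return 2; }
  until grep -q "Application startup complete" "$log" 2>/dev/null; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "Timed out after ${timeout}s waiting for $log" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "Backend ready: startup message found in $log"
}
```

Usage might look like `wait_for_startup "$(find_session_log YOUR_SESSION_ID)"`. The helper stops at the first occurrence of the message, just like the manual check. If more than one server writes to the same log, the message may appear more than once.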

Launching the Interface

- Go back to your original OOD session window.

- Now click “Launch vLLM + Open WebUI”.

You will be redirected to the frontend interface of the application. Sometimes you may see a message saying the webpage doesn’t exist. This usually means the frontend was not ready yet.

- Simply press F5 (refresh), and you should be redirected correctly.

- If the issue persists, check the logs again to confirm the backend is fully running.
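If you can open a shell on the compute node running the session, you can also probe the servers over HTTP before refreshing. vLLM's OpenAI-compatible server exposes a `/health` endpoint that answers successfully once it is serving requests. Several details here are assumptions: the host, the port (vLLM defaults to 8000), and the Open WebUI frontend's URL are not given in this guide. `wait_for_http` is a hypothetical helper that relies on `curl` being installed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it answers with a status below 400.
# Args: URL, timeout in seconds (default 600), poll interval (default 5).
# Returns 0 once the server responds, 1 on timeout.
wait_for_http() {
  local url="$1" timeout="${2:-600}" interval="${3:-5}" elapsed=0
  # --fail: non-zero exit on HTTP status >= 400 (e.g. 404 "page not found").
  # --silent: no progress output. --max-time: bound each single attempt.
  until curl --silent --fail --max-time 5 --output /dev/null "$url"; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "No successful response from $url after ${timeout}s" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "$url is responding"
}
```

For example, `wait_for_http http://localhost:8000/health` waits for a vLLM server on its default port. Point the helper at whatever address the Open WebUI frontend listens on to check the page you would otherwise refresh with F5.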