Resource Allocation Policy¶

Job Queue Policies¶

Resources are allocated to jobs in a fair-share fashion, subject to constraints set by the queue and the resources available to the project. The fair-share system ensures that individual users may consume approximately equal amounts of resources per week. Detailed information can be found in the Job scheduling section.

Resources are accessible via several queues for queueing the jobs. Queues provide prioritized and exclusive access to the computational resources.

Computational resources are subject to accounting policy.

Important

Queues are divided based on a resource type: qcpu_ for non-accelerated nodes and qgpu_ for accelerated nodes.

EuroHPC queues are no longer available. If you are an EuroHPC user, use standard queues based on allocated/required type of resources.

Queues¶

Queue |

Description |

|---|---|

qcpu |

Production queue for non-accelerated nodes intended for standard production runs. Requires an active project with nonzero remaining resources. Full nodes are allocated. Identical to qprod. |

qgpu |

Dedicated queue for accessing the NVIDIA accelerated nodes. Requires an active project with nonzero remaining resources. It utilizes 8x NVIDIA A100 with 320GB HBM2 memory per node. The PI needs to explicitly ask support for authorization to enter the queue for all users associated with their project. On Karolina, you can allocate 1/8 of the node - 1 GPU and 16 cores. For more information, see Karolina qgpu allocation. |

qgpu_big |

Intended for big jobs (>16 nodes), queue priority is lower than production queue prority, priority is temporarily increased every even weekend. |

qcpu_bizqgpu_biz |

Commercial queues, slightly higher priority. |

qcpu_expqgpu_exp |

Express queues for testing and running very small jobs. There are 2 nodes always reserved (w/o accelerators), max 8 nodes available per user. The nodes may be allocated on a per core basis. It is configured to run one job and accept five jobs in a queue per user. |

qcpu_freeqgpu_free |

Intended for utilization of free resources, after a project exhausted all its allocated resources. Note that the queue is not free of charge. Normal accounting applies. Consumed resources will be accounted to the Project. Access to the queue is removed if consumed resources exceed 150% of the allocation. Full nodes are allocated. |

qcpu_long |

Queues for long production runs. Require an active project with nonzero remaining resources. Only 200 nodes without acceleration may be accessed. Full nodes are allocated. |

qcpu_preemptqgpu_preempt |

Free queues with the lowest priority (LP). The queues require a project with allocation of the respective resource type. There is no limit on resource overdraft. Jobs are killed if other jobs with a higher priority (HP) request the nodes and there are no other nodes available. LP jobs are automatically re-queued once HP jobs finish, so make sure your jobs are re-runnable. |

qdgx |

Queue for DGX-2, accessible from Barbora. |

qfat |

Queue for fat node, PI must request authorization to enter the queue for all users associated to their project. |

qviz |

Visualization queue Intended for pre-/post-processing using OpenGL accelerated graphics. Each user gets 8 cores of a CPU allocated (approx. 64 GB of RAM and 1/8 of the GPU capacity (default "chunk")). If more GPU power or RAM is required, it is recommended to allocate more chunks (with 8 cores each) up to one whole node per user. This is currently also the maximum allowed allocation per one user. One hour of work is allocated by default, the user may ask for 2 hours maximum. |

See the following subsections for the list of queues:

Queue Notes¶

The job time limit defaults to half the maximum time, see the table above. Longer time limits can be set manually, see examples.

Jobs that exceed the reserved time limit get killed automatically.

The time limit can be changed for queuing jobs (state Q) using the scontrol modify job command,

however it cannot be changed for a running job.

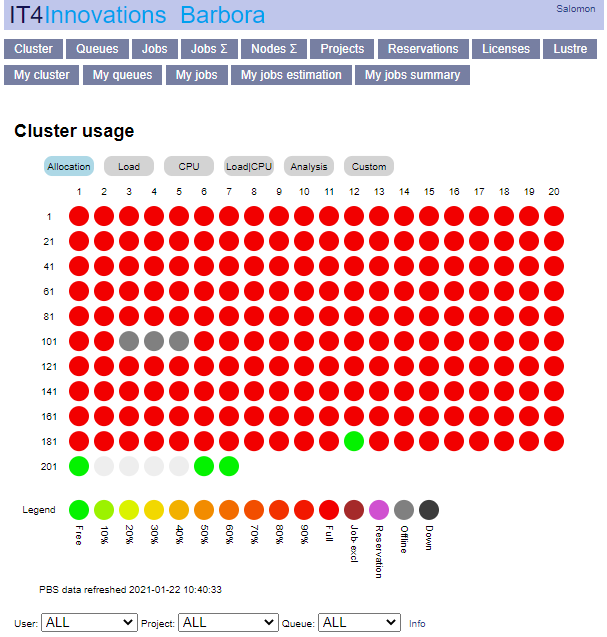

Queue Status¶

Tip

Check the status of jobs, queues and compute nodes here.

Display the queue status:

$ sinfo -s

The Slurm allocation overview may also be obtained using the rsslurm command:

$ rsslurm

Usage: rsslurm [options]

Options:

--version show program's version number and exit

-h, --help show this help message and exit

--get-server-details Print server

--get-queues Print queues

--get-queues-details Print queues details

--get-reservations Print reservations

--get-reservations-details

Print reservations details

...

..

.